Self-harm and suicide content shown shocks experts

All social media platforms use algorithms to assess user behaviour. However, unlike most others, which initially show users content from profiles or accounts they follow, TikTok has always primarily recommended content to users based on what they have watched.

On foot of concerns published by researchers and advocacy groups like Amnesty International about young teens’ mental health being negatively influenced by content on TikTok, Prime Time conducted an experiment.

Three new TikTok accounts were created on phones with newly installed operating systems. Each time, when asked to provide the user’s age, Prime Time gave a date in 2011. As a result, TikTok understood the user was 13 years old.

Prime Time did not search for topics, ‘like’ or comment on videos, or engage with content in any similar way. We watched the videos shown by TikTok on the ‘For You’ feed, and when videos related to topics like parental relationships, loneliness, or feelings of isolation appeared, we watched them twice.

Within minutes, the accounts, which TikTok understood to be controlled by 13-year-old users, were in a mental health content rabbit hole.

Note: This article contains references to and discussion of self-harm and suicide. Details of organisations that may assist in relation to such issues can be found at rte.ie/helplines.

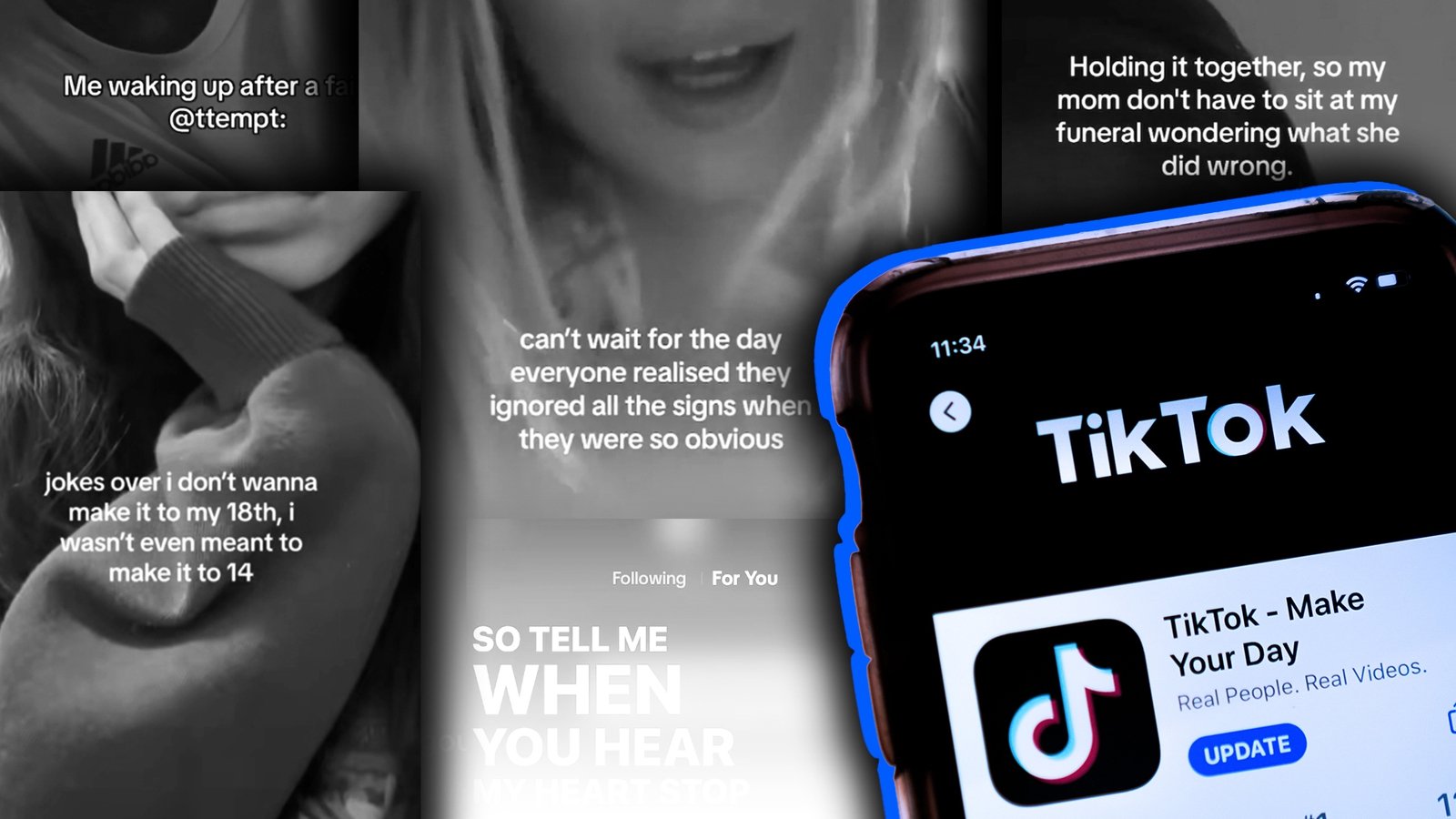

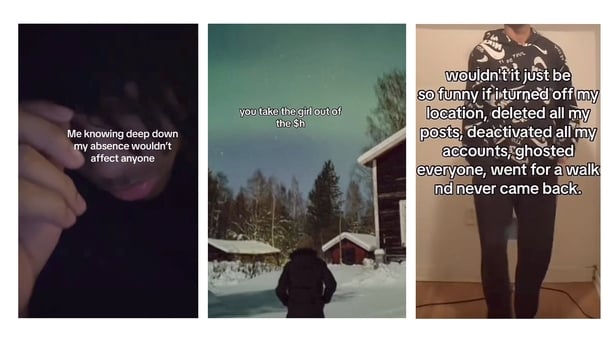

Fourteen minutes into scrolling through videos on my new TikTok account and one appears of a teenager crying in what looks like their bedroom.

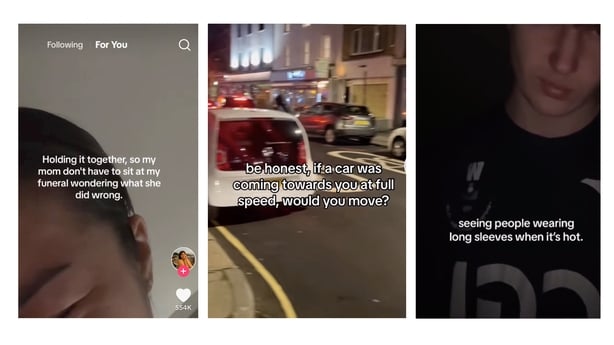

The text overlaid on the video reads ‘holding it together so my mom doesn’t have to sit at my funeral wondering what she did wrong.’

The sound with it is a song: ‘How to Save a Life’ by The Fray. There are more than 550,000 likes on the video.

It was just the latest piece of content shown in what was already becoming a stream related to depression, self-harm and suicide over the previous quarter-hour.

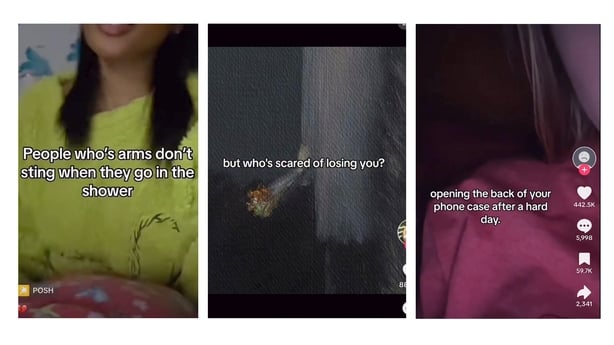

Some videos referenced self-harm scars, with captions such as ‘you know it broke me when I saw your arms,’ or ‘making eye contact with a person staring at my scars with disgust.’

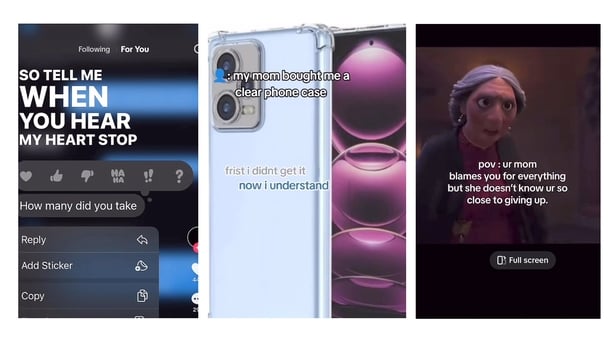

Other videos alluded to clear phone covers, a known trend on TikTok related to self-harm in young people. It references the idea that an authority figure has required the young person to begin using a see-through phone cover, to try to ensure the young person would not hide razor blades within it.

In 2023, Amnesty International, with the assistance of a Portugal-based research organisation called AI Forensics, carried out a similar exercise as part of a report examining the impact of TikTok on teens in the US, Kenya and the Philippines.

Prime Time set the user locations to Ireland and recorded the videos shown for one hour on each account.

Content was then shown to the Chair of the Faculty of Child & Adolescent Psychiatry in the College of Psychiatrists of Ireland, Dr Patricia Byrne, and Dr Richard Hogan, a psychotherapist who specialises in working with families.

“I work in this area every day… but even for me, seeing that was very emotional and provokes a very strong, very strong emotional reaction,” Dr Byrne said, visibly upset.

“I didn’t expect to feel as emotional as I did watching it, but they’re very powerful imagery and very intense.”

Dr Richard Hogan told Prime Time: “I’m deflated, I’m emotional. I’m angry.”

“I find myself getting emotional watching that because I work with so many brilliant teenagers, so many wonderful teenagers who have been pulled into the murky world of serious mental health issues because of things like that,” he added, responding to one of the videos referencing suicide.

“It is absolutely suggesting suicide as a course to deal with your psychological upset there. I mean, that is, that is heinous,” he added.

Dr Byrne says adolescents seeing such content – which in some instances glamorises self-harm and references suicide – poses many risks.

“There are risks that increase negative thoughts, low mood, and it can increase the thoughts of self-harm or the risk of self,” she said.

TikTok’s success is based on the power of its recommendation system, an algorithm which analyses users’ engagement with content for indicators of interests, and uses those indicators to decide which video to display next.

Within 20 minutes, Prime Time was being shown videos directly referencing self-harm and suicidal thoughts.

By the end of an hour of scrolling, TikTok’s recommender system was showing a stream of videos almost exclusively related to depression, self-harm, and suicidal thoughts to the users it believed to be 13 years old.

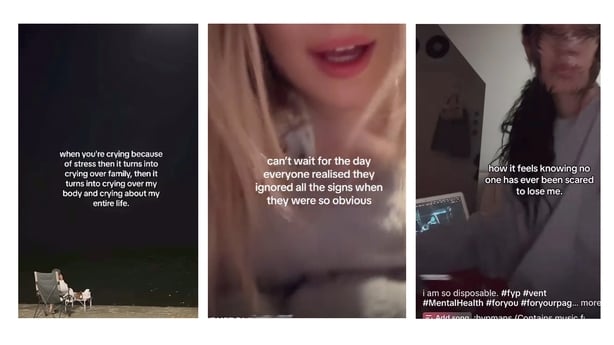

Some people who had posted videos included symbols or coded language within their captions – sometimes called ‘algospeak’ – so their posts were not removed automatically by the app. It appeared no other moderation process was functioning to block the content from reaching young users.

The automated moderation process means TikTok will not return results when users search directly for terms like ‘self-harm’ or ‘suicide.’ Instead, the results page will advise the user of organisations which provide mental health support services.

Prime Time found many users used terms like ‘SH’ or ‘$H’ instead of ‘self-harm’ to easily get around the system.

In a response to queries from Prime Time, TikTok said: “We approach topics of suicide and self-harm with immense care and are guided by mental health experts, including those we have on staff. As RTÉ found, we make it easy for people who may be struggling to access professional support, and our Refresh feature lets people change what is recommended to them.”

The ‘Refresh’ feature allows users to clear what the app understands about their profile, and begin again. It is not a moderation system.

Videos shown to Prime Time also referenced suicide and suicidal thoughts.

Typically, melancholic images were overlaid with text. Text in one video read ‘You’re scared of losing people, but who’s scared of losing you?’

A second video showed an image of an individual while the text read ‘me knowing deep down my absence wouldn’t affect anyone.’

Another had text which read ‘can’t wait for the day everyone realised they ignored all the signs when they were so obvious’; yet another said ‘me waking up after a failed @ttempt’, and ‘how many did you take.’

“The problem… is how the algorithm basically drives users from less problematic content such as sadness or romantic breakups, to more concerning content like depression,” Head of Research with AI Forensics, Salvatore Romano, told Prime Time.

He said the research they conducted with Amnesty International returned very similar results.

“Immediately this [mental health] content was recommended to us and to our accounts,” Mr Romano said.

“We were indeed concerned and surprised by the fact that this, let’s say, general topic of sadness, depression, can even lead to videos about suicide…the escalation and the radicalisation of the recommendation made by the algorithm escalated quite quickly.”

After conducting the experiment, Prime Time gave TikTok the usernames of the accounts set up. This allowed TikTok to access all the videos that had been shown to the accounts.

We also provided TikTok with screenshots from ten example videos.

TikTok said: “RTÉ’s test in no way accurately represents the behaviour or experiences of real teens who use our app… Out of hundreds of videos that would’ve been seen during RTÉ’s testing, we reviewed ten that were sent to us and made changes so that seven can no longer be viewed by teenagers.”

Dr Byrne, Chair of the Faculty of Child & Adolescent Psychiatry in the College of Psychiatrists of Ireland, says the brain is in a period of massive change and cognitive development during adolescence and, as a result, certain types of content can grip adolescents’ attention.

“Adolescents are designed to seek out novel experiences. Their brain is hardwired to seek out more and more experiences,” she told Prime Time.

“Their brain is wired to have more impact of emotional reasoning. So, they will feel emotions much bigger. They will get more of an impact from the dopamine, the reward centres, of hitting something that connects with them… and they’re much more likely to have a lower attention span and higher impulsivity and higher risk-taking behaviour,” Dr Byrne said.

She says a major risk is what is known as ‘the contagion effect.’

This refers to circumstances where, if a young person dies by suicide and the death is reported in a way that glorifies it, young people can come to perceive “that this is a positive way to express feelings of distress… There can be an increase in the rates of suicide above other young people in the time.”

The same risk exists for seeing harmful content, she said.

In such circumstances, self-harm can be seen as “being promoted or glorified as a way of coping” or as a “way that you can express your distress for a vulnerable young person.”

Dr Hogan says his experience from working with families in therapy sessions is that the power of social media, technology, and algorithms is having a massive impact on many families – as well as within schools where he often speaks and works.

“What I see… is that the family is falling apart, the fabric of the family, which is the fabric of society, it is falling apart because everyone’s living this separate life. They’re all consuming technology. There’s no, there’s no connection.”

“The three big things that I see in schools are self-harm, school refusal, and anxiety,” he added.

Statistics about rates of self-harm are compiled by the National Suicide Research Foundation, but they only cover instances which result in people attending emergency departments.

Since 2002, when the foundation began collating the data, the number of presentations to emergency departments has increased by around 18%.

What particularly concerns consultants like Dr Byrne is the high – and growing – number of teenagers presenting.

“What we know is that every year the age group that has the highest rate of self-harm is those aged 15 to 19 years,” Dr Byrne said.

“But of huge concern is, over recent years, the age group with the most rapid increase in rates in self-harm is those aged 10 to 14 years. And that is really a worrying sign.”

There is no single cause for that increase. While our research focused on TikTok, those working in child and adolescent psychiatry say the pressures on young people – in part, driven by social media in general – are a factor.

Mr Romano says the design and functionality of TikTok – and especially the way in which young people are presented with content from accounts they do not follow – is particularly concerning.

He says it is devised to “achieve the maximum engagement.”

The power of the algorithm also concerns Johnny Ryan, Senior Fellow at the Irish Council for Civil Liberties (ICCL).

“To keep the young person on the screen, the secret is to give them more and more extreme versions of what they’ve shown some interest in,” he said.

Mr Ryan and the ICCL say TikTok users should be able to search for content in a way that is not algorithmically-driven, and that the recommender systems that drive TikTok’s suggested videos should be turned off by default.

A poll of 1,270 people, commissioned in January by the ICCL and campaign group Uplift, found that almost 74% of respondents believed social media algorithms should be more strictly regulated.

In February, the European Commission launched an investigation into TikTok over concerns the platform is not adequately protecting children. The investigation focuses on TikTok’s design and alleged shortcomings in adhering to the Digital Services Act (DSA).

Under this Act, the responsibility for regulating large social media companies is shared between the European Commission and the regulator of the EU Member State where the service has its EU headquarters.

Because TikTok has its EU headquarters in Dublin, Ireland’s online media regulator, Comisiún na Meán, bears significant responsibility for regulating how TikTok operates in the EU.

It said in a statement that “online platforms which are accessible to minors must have appropriate and proportionate measures to ensure a high level of privacy, safety and security of minors.”

It said it is currently working on an Online Safety Code for Ireland.

“The finalised Code will apply to video-sharing platform services, including TikTok. The final Code will prohibit the sharing of content which promotes eating or feeding disorders, as well as promoting self-harm or suicide on video-sharing platforms, amongst a range of other harmful and illegal content.”

For Johnny Ryan of the ICCL, the problem is an urgent one.

“We are past the point where we’re asking these tech companies to fix the problems that they have created for our children,” he said.

“Our regulator Comisiún na Meán, has the power in law to tell TikTok or any other company in this same category, ‘stop your algorithm that is hurting our kids, that is causing self-loathing, self-hate… Switch that off.’ And it has the power to do that.”

Dr Byrne and Dr Hogan agree that regulation is the key.

“There is huge work – but a lot more needs to be done, to try and manage and regulate these social media,” Dr Byrne said.

“The government needs to bring in regulation here to robust legislation to protect our children,” said Dr Hogan.

If you have been affected by the issues raised in this article, visit rte.ie/helplines.